I have two directories, each with thousands of files, and they contain more or less the same files.

How can I copy all files from dirA to dirB that are not yet in dirB, and, if a file already exists in dirB, overwrite it only when the copy in dirB is smaller?

I know there are a lot of examples that overwrite based on a different timestamp or a different file size, but I only want to overwrite if the destination file is smaller, and under no circumstances if it's bigger.
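To make the rule precise, here is a minimal Python sketch of the behaviour I'm after. It is only an illustration, not a tested solution for 6 million files: the function name `copy_if_missing_or_smaller` is made up, and I'm assuming that files of equal size should be left alone.

```python
import os
import shutil


def copy_if_missing_or_smaller(src_dir, dst_dir):
    """Copy files from src_dir into dst_dir.

    A file is copied when it is missing in dst_dir, or when the existing
    destination file is strictly smaller than the source file.
    Returns the sorted relative paths of the files that were copied.
    """
    copied = []
    for root, _dirs, files in os.walk(src_dir):
        rel = os.path.relpath(root, src_dir)
        target_root = dst_dir if rel == "." else os.path.join(dst_dir, rel)
        for name in files:
            src = os.path.join(root, name)
            dst = os.path.join(target_root, name)
            # Assumption: equal or bigger destination files are never touched.
            if os.path.exists(dst) and os.path.getsize(dst) >= os.path.getsize(src):
                continue
            os.makedirs(target_root, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves timestamps
            copied.append(os.path.relpath(dst, dst_dir))
    return sorted(copied)
```

Calling `copy_if_missing_or_smaller("dirA", "dirB")` would then be the whole operation. A shell or rsync-based equivalent would be just as welcome.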

Background of my problem:

I've rendered a dynmap on my Minecraft server, but some of the tiles are missing or corrupted. I then did the rendering again on another machine with a faster CPU and copied all the newly rendered files (~50 GB / 6,000,000 PNGs of ~4-10 KB each) to my server. After that I noticed that there are also corrupted files in my new render.

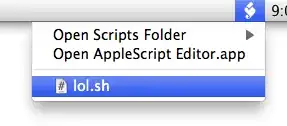

left: old render, right: new render

Therefore I don't want to overwrite all files, but only those where the new file is bigger than the existing one (the corrupted tiles carry less data and are smaller).