Here in early 2017, judging from information readily available online, it looks like the fastest SATA HDDs max out at around 220 MegaBytes/sec, which is 1.76 Gigabits/sec.

So if you're just trying to beat the speed of a single drive, and you're limited to HDDs because of cost-per-terabyte considerations for huge video files, then 10 Gigabit Ethernet is plenty.
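To make that arithmetic easy to redo with your own drive's numbers, here's a minimal sketch of the conversion. It assumes decimal units (1 MB = 1,000,000 bytes) and compares against the nominal 10 Gigabit line rate, ignoring protocol overhead. The function and constant names are just mine for illustration.

```python
def mbytes_to_gbits(mbytes_per_sec: float) -> float:
    """Convert decimal megabytes/sec to gigabits/sec (8 bits per byte)."""
    return mbytes_per_sec * 8 / 1000

HDD_MBYTES_PER_SEC = 220   # fastest SATA HDD figure quoted above
LINK_GBITS_PER_SEC = 10    # nominal 10 Gigabit Ethernet line rate (assumption: no overhead)

hdd_gbits = mbytes_to_gbits(HDD_MBYTES_PER_SEC)
headroom = LINK_GBITS_PER_SEC / hdd_gbits   # how many times faster the link is

print(f"Single HDD: {hdd_gbits:.2f} Gbit/s")
print(f"10GbE is roughly {headroom:.1f}x a single HDD")
```

Real-world throughput will come in under the line rate, so treat the headroom figure as an upper bound.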

As an aside, note that Thunderbolt Networking is 10 Gigabit/sec as well, so if you already have Thunderbolt ports, you can experiment with that. It could conceivably beat your 6 Gigabit eSATA 3 ports, although I'm not sure about that because eSATA is very storage-specific, whereas doing storage over Ethernet has more overhead. Also note that Thunderbolt is a desktop bus; it only reaches a few meters, not the 100m that 10 Gigabit Ethernet can handle. So while Thunderbolt may be interesting for experimenting and prototyping while you weigh your options, it's probably not the right long-term solution for you unless you want to keep all your workstations and disks connected back-to-back around a large table.

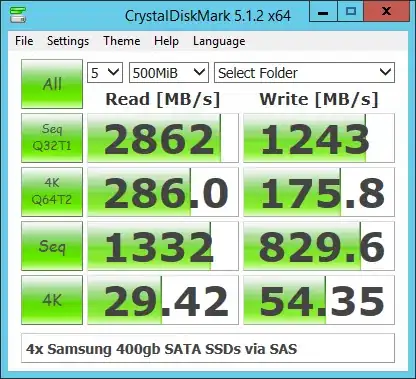

So that was for single HDDs. But if you RAID those drives together so that every read or write gets spread out across multiple drives, you can get much better performance than a single drive delivers. Also, depending on your budget, you could put PCIe/M.2 NVMe SSDs into a PC to act as a server/NAS, and you can get blazing fast storage performance (around 3.4 GigaBytes/sec == 27 Gigabits/sec) per drive.
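As a rough back-of-the-envelope check on when striped drives start to outrun the network, here's a small sketch. It assumes idealized striping where throughput scales linearly with drive count, which real RAID setups won't fully achieve, so take the result as a best case. The helper name is hypothetical.

```python
import math

def drives_to_fill_link(link_gbits: float, drive_mbytes_per_sec: float) -> int:
    """Smallest number of striped drives whose combined throughput
    meets or exceeds the link rate, assuming perfect linear scaling."""
    drive_gbits = drive_mbytes_per_sec * 8 / 1000
    return math.ceil(link_gbits / drive_gbits)

# 220 MB/s HDDs striped together vs. a 10 Gigabit link
hdds_for_10gbe = drives_to_fill_link(10, 220)

# A single NVMe SSD at about 3.4 GB/s (3400 MB/s), expressed in Gbit/s
nvme_gbits = 3400 * 8 / 1000

print(f"HDDs needed to fill 10GbE (ideal striping): {hdds_for_10gbe}")
print(f"One NVMe SSD: {nvme_gbits:.1f} Gbit/s")
```

So under these assumptions it takes about half a dozen of those HDDs striped together before 10 Gigabit Ethernet becomes the bottleneck, while a single NVMe drive is already well past it.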

In that case you might want to look at something faster than 10 Gigabit Ethernet, but glancing around online, it looks like prices jump quite dramatically beyond 10 Gigabit Ethernet. So you might want to look at doing link aggregation across multiple 10 Gigabit links. I've also seen some anecdotes online that secondhand network equipment, such as 40 Gbps InfiniBand gear, can be bought on eBay for dirt cheap, if you don't mind the hassles that come with buying used gear on eBay.
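If you want to size that up, here's a minimal sketch of how many links it would take to carry a given storage throughput. It assumes traffic spreads perfectly evenly across the aggregated links, which isn't guaranteed in practice (a single transfer may well stay on one link), so this is a ceiling, not a promise. The function name and the 27.2 Gbit/s figure (3.4 GB/s times 8) are just for illustration.

```python
import math

def links_needed(storage_gbits: float, link_gbits: float = 10.0) -> int:
    """Number of links of a given nominal rate needed to carry the storage
    throughput, assuming ideal, perfectly even load distribution."""
    return math.ceil(storage_gbits / link_gbits)

NVME_GBITS = 27.2  # one NVMe SSD at about 3.4 GB/s

ten_gig_links = links_needed(NVME_GBITS)           # aggregated 10 Gigabit links
forty_gig_links = links_needed(NVME_GBITS, 40.0)   # 40 Gbps InfiniBand links

print(f"10 Gbit links needed: {ten_gig_links}")
print(f"40 Gbit links needed: {forty_gig_links}")
```

Under those idealized assumptions, one NVMe drive would want three aggregated 10 Gigabit links, or a single 40 Gbps link.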