How can I download all pages from a website?

Any platform is fine.

HTTRACK works like a champ for copying the contents of an entire site. This tool can even grab the pieces needed to make a website with active code content work offline. I am amazed at the stuff it can replicate offline.

This program will do all you require of it.

Happy hunting!

Wget is a classic command-line tool for this kind of task. It comes with most Unix/Linux systems, and you can get it for Windows too. On a Mac, Homebrew is the easiest way to install it (brew install wget).

You'd do something like:

wget -r --no-parent http://example.com/songs/

For more details, see the Wget manual and its examples.

Use wget:

wget -m -p -E -k www.example.com

The options explained:

-m, --mirror Turns on recursion and time-stamping, sets infinite recursion depth, and keeps FTP directory listings.

-p, --page-requisites Get all images, etc. needed to display HTML page.

-E, --adjust-extension Save HTML/CSS files with .html/.css extensions.

-k, --convert-links Make links in downloaded HTML point to local files.

-np, --no-parent Don't ascend to the parent directory when retrieving recursively. This guarantees that only the files below a certain hierarchy will be downloaded. Requires a slash at the end of the directory, e.g. example.com/foo/.
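To tie the options above together, here is a minimal sketch of a wrapper that mirrors one directory of a site. The name mirror_site and the DRY_RUN variable are made up for illustration and are not part of wget. It also assumes the URL you pass names a directory, since it appends the trailing slash that -np needs.

```shell
#!/bin/sh
# mirror_site is a hypothetical helper name, not something wget provides.
# Assumption: the URL points at a directory, not at a single file.
mirror_site() {
    url=$1
    # -np only works as intended when the directory URL ends with a slash.
    case "$url" in
        */) ;;
        *) url="$url/" ;;
    esac
    # The same options explained above, plus -np to stay below the start directory.
    set -- wget -m -p -E -k -np "$url"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        # Print the command instead of running it.
        echo "$*"
    else
        "$@"
    fi
}
```

Setting DRY_RUN=1 before calling mirror_site http://example.com/foo prints the command without touching the network, which is handy for checking the URL first.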

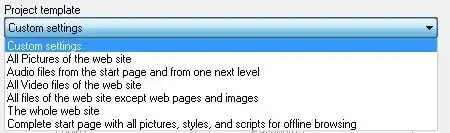

Internet Download Manager has a Site Grabber utility with a lot of options, which lets you download any website you want, the way you want it.

You can set a limit on the size of the pages/files to download

You can set the number of branch sites to visit

You can change the way scripts/popups/duplicates behave

You can specify a domain, so that only pages/files under that domain that meet the required settings are downloaded

The links can be converted to offline links for browsing

You have templates which choose the above settings for you

The software is not free, however. Use the evaluation version to see if it suits your needs.

You should take a look at ScrapBook, a Firefox extension. It has an in-depth capture mode.

I like Offline Explorer.

It's shareware, but it's very good and easy to use.

Teleport Pro is another free solution that will copy down any and all files from whatever your target is (also has a paid version which will allow you to pull more pages of content).

While wget was already mentioned, this command line worked so seamlessly that I thought it deserved a mention:

wget -P /path/to/destination/directory/ -mpck --user-agent="" -e robots=off --wait 1 -E https://www.example.com/
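For anyone wondering what each piece of that command does, here is the same invocation built up one option at a time with a comment per option. This is only a sketch: the wrapper name polite_mirror and the DRY_RUN variable are hypothetical, while the wget options are the ones from the command above.

```shell
#!/bin/sh
# polite_mirror is a hypothetical wrapper name; only the wget options are real.
# With DRY_RUN=1 it prints the command instead of running it.
polite_mirror() {
    dest=$1
    url=$2
    set -- wget
    set -- "$@" -P "$dest"        # save everything under this directory
    set -- "$@" -m                # mirror: recursion plus time-stamping
    set -- "$@" -p                # also fetch images, CSS and other page requisites
    set -- "$@" -c                # continue partially downloaded files
    set -- "$@" -k                # convert links for local viewing
    set -- "$@" --user-agent=""   # empty value: send no User-Agent header
    set -- "$@" -e robots=off     # ignore robots.txt (use with care)
    set -- "$@" --wait 1          # pause one second between requests
    set -- "$@" -E                # add .html/.css extensions where needed
    set -- "$@" "$url"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}
```

Call it as polite_mirror /path/to/destination/directory/ https://www.example.com/, with DRY_RUN=1 set beforehand if you only want to see the command.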

Try BackStreet Browser.

It is a free, powerful offline browser: a high-speed, multi-threading website download and viewing program. By making multiple simultaneous server requests, BackStreet Browser can quickly download an entire website or part of a site, including HTML, graphics, Java applets, sound and other user-definable files. It saves all the files on your hard drive, either in their native format or as a compressed ZIP file, for viewing offline.

For Linux and OS X: I wrote grab-site for archiving entire websites to WARC files. These WARC files can be browsed or extracted. grab-site lets you control which URLs to skip using regular expressions, and these can be changed when the crawl is running. It also comes with an extensive set of defaults for ignoring junk URLs.

There is a web dashboard for monitoring crawls, as well as additional options for skipping video content or responses over a certain size.

How can I download an entire website?

In my case, I wanted to download not an entire website, but just a subdirectory, including all its subdirectories.

As an example, I tried:

wget -E -k -m -np -p https://www.mikedane.com/web-development/html/

which worked just fine. 1

In my experience, this doesn't always get all the subdirectories or PDFs, but I did get a fully functional copy that works fine offline.

Here are the meanings of the flags I used, according to the Linux man page: 2

-E – will cause the suffix .html to be appended to the local filename

-k – converts the links to make them suitable for local viewing

-m – turns on recursion and time-stamping, infinite recursion depth

-np – only the files below a certain hierarchy will be downloaded

-p – download all files necessary to properly display the pages

1 If you try it, expect the download to be about 793 KiB. In a previous version, I had index.html at the end of the URL. This is unnecessary. It might even make the download fail.

2 Concerning the -np flag, the exception is when there are dependencies outside the hierarchy. For example, I made a download for which the referenced CSS files are in a different subdirectory. Yet, the subdirectory that has the CSS files was also downloaded, which is what we want, of course.
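The -np behaviour described in note 2 can be pictured with a small sketch. This is a simplified model of the rule, not wget's actual implementation: the function name would_fetch and the "inline" marker are invented for illustration, and it assumes everything lives on the same host.

```shell
#!/bin/sh
# would_fetch START_DIR URL [inline]
# Hypothetical model of -np combined with -p (not wget's real code):
#   - ordinary links are followed only if they sit below START_DIR
#   - inline page requisites (CSS, images) are fetched even outside it
would_fetch() {
    start=$1
    url=$2
    kind=${3:-link}
    # Page requisites bypass the no-parent check.
    if [ "$kind" = inline ]; then
        return 0
    fi
    # Ordinary links must begin with the start directory.
    case "$url" in
        "$start"*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, would_fetch https://example.com/a/b/ https://example.com/a/style.css fails for a plain link but succeeds when the third argument is inline, which matches the CSS case above.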

You can use the free online tools below, which will make a zip file of all the contents at that URL:

Cyotek WebCopy also seems to be a good alternative. For my situation, trying to download a DokuWiki site, it currently seems to lack support for CSRF/SecurityToken. That's why I actually went for Offline Explorer, as already mentioned in an answer above.

A1 Website Download for Windows and Mac is yet another option. The tool has existed for nearly 15 years and has been continuously updated. It features separate crawl and download filtering options with each supporting pattern matching for "limit to" and "exclude".

The venerable FreeDownloadManager.org has this feature too.

Free Download Manager has it in two forms: Site Explorer and HTML Spider:

Site Explorer

Site Explorer lets you view the folders structure of a web site and easily download necessary files or folders.

HTML Spider

You can download whole web pages or even whole web sites with HTML Spider. The tool can be adjusted to download files with specified extensions only.

I find Site Explorer useful for seeing which folders to include/exclude before you attempt to download the whole site, especially when there is an entire forum hiding in the site that you don't want to download, for example.

I believe Google Chrome can do this on desktop devices: just go to the browser menu and click save webpage.

Also note that services like Pocket may not actually save the website, and are thus susceptible to link rot.

Lastly, note that copying the contents of a website may infringe on copyright, if it applies.