Is there any easy way to find all files in a particular directory that have any non-ASCII (ie. Unicode) characters in the filename? I'm running Windows XP x64 SP2, NTFS filesystem.

-

If the filename contains no bytes above 0x7F, then it's ASCII. That doesn't mean it's Unicode, though. You should be able to this in Python as well as many other programming languages. – martineau Jan 25 '11 at 11:55

4 Answers

Here's a method using Powershell:

gci -recurse . | where {$_.Name -cmatch "[^\u0000-\u007F]"}

- 531

- 4

- 7

-

you can use this as a *batch* file: ```powershell -Command "gci -recurse . | where {$_.Name -match '[^\u0000-\u007F]'}"``` – Behrouz.M Sep 19 '17 at 10:32

-

This answer is excellent and still relevant. What would make it even better (though the asker didn't request it) is an option to *replace* or *remove* those illegal characters. – Thomas Apr 23 '18 at 23:46

-

2Figured it out `gci -recurse -force | where {$_.Name -match "[^\u0000-\u007F]"} | rename-item -newname { $_.name -replace "[^\u0000-\u007F]",''}` – Thomas Apr 24 '18 at 00:14

-

-

2@js2010 Based on your comment and answer, I updated the command to use "-cmatch". – Cheran Shunmugavel Mar 15 '20 at 22:26

-

I ended up writing a Python script for this. Posting it in case it helps anyone. Feel free to move to StackOverflow.

import sys, os

def main(argv):

if len(argv) != 2:

raise Exception('Syntax: FindUnicodeFiles.py <directory>')

startdir = argv[1]

if not os.path.isdir(startdir):

raise Exception('"%s" is not a directory' % startdir)

for r in recurse_breadth_first(startdir, is_unicode_filename):

print(r)

def recurse_breadth_first(dirpath, test_func):

namesandpaths = [(f, os.path.join(dirpath, f)) for f in os.listdir(dirpath)]

for (name, path) in namesandpaths:

if test_func(name):

yield path

for (_, path) in namesandpaths:

if os.path.isdir(path):

for r in recurse_breadth_first(path, test_func):

yield r

def is_unicode_filename(filename):

return any(ord(c) >= 0x7F for c in filename)

if __name__ == '__main__':

main(sys.argv)

- 4,958

- 12

- 45

- 52

-

I've tested this script and it works just like the above powershell script. https://superuser.com/a/272063/117014 – Behrouz.M Sep 19 '17 at 10:33

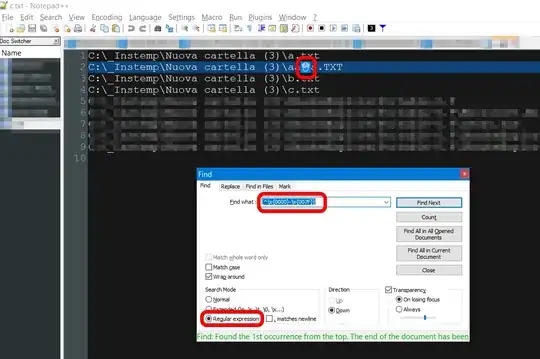

Open a command prompt cmd execute the command: chcp 65001 this will change the codepage to UTF-8

Then, create a file list: dir c:\myfolder /b /s >c:\filelist.txt

Now you have a text file, utf-8 encoded, that contain all the filenames you want to search.

Now you can load this file in Notepad++ (it's a free text editor) and search for non ascii char with a regular expression like [^\x{0000}-\x{007F}] :

- 1,079

- 8

- 11

Here's a Microsoft character class. It only works with a case sensitive match. The capital P means "not".

gci -recurse . | where { $_.name -cmatch '\P{IsBasicLatin}' } # 0000 - 007F

Or just not printable, not from space to tilde, nor tab:

gci -recurse . | where { $_.name -cmatch '[^ -~\t]' }

There's a gotcha with searching for non-ascii characters, depending on how you do it. There's 2 non-ascii characters whose lowercase versions are ascii, the Turkish İ (0x130), and Kelvin symbol K (0x212a). In this example, both small i and capital I match as non-ascii:

# powershell

echo i I | where { $_ -match '[\u0080-\uffff]' }

i

I

A workaround is to use a case sensitive search (cmatch here):

echo i I | where { $_ -cmatch '[\u0080-\uffff]' } # no output

Kelvin K matching as ascii (case insensitive):

[char]0x0212a | select-string '[\u0000-\u007f]'

K

Case sensitive works ok.

[char]0x0212a | select-string '[\u0000-\u007f]' -CaseSensitive # no output

- 575

- 5

- 6