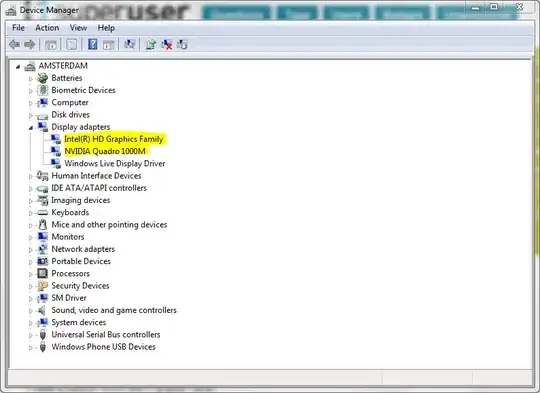

I have a Lenovo W520 laptop with two graphics cards:

I think Windows 7 (64-bit) is using my Intel graphics card, which I think is integrated, because I have a low graphics rating in the Windows Experience Index. Also, the Intel card has 750 MB of RAM while the NVIDIA card has 2 GB.

- How do I know for certain which card Windows 7 is really using?

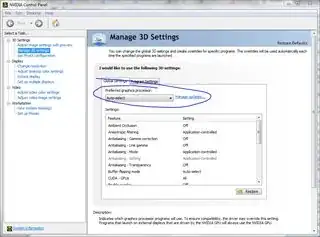

- How do I change it?

- Since this is a laptop and the display is built in, how would changing the graphics card affect the built-in display?