I would like to use "find" and "locate" to search for source files in my project, but they take a long time to run. Are there faster alternatives to these programs that I don't know about, or ways to speed them up?

-

`locate` should already be plenty fast, considering that it uses a pre-built index (the primary caveat being that it needs to be kept up to date), while `find` has to read the directory listings. – afrazier Sep 29 '11 at 13:22

-

Which locate are you using? mlocate is faster than slocate by a long way (note that whichever package you have installed, the command is still locate, so check your package manager) – Paul Sep 29 '11 at 13:35

-

@benhsu, when I run `find /usr/src -name fprintf.c` on my OpenBSD desktop machine, it returns the locations of those source files in less than 10 seconds. `locate fprintf.c | grep '^/usr/src.*/fprintf.c$'` comes back in under a second. What is your definition of "long time to run" and how do you use `find` and `locate`? – Kusalananda Sep 29 '11 at 14:15

-

@Paul, I am using mlocate. – benhsu Sep 29 '11 at 17:15

-

@KAK, I would like to use the output of find/locate to open a file in emacs. The use case I have in mind is: I wish to edit a file, I type the file name (or some regexp matching the file name) into emacs, and emacs uses find/locate to bring up a list of matching files, so I would like the response time to be fast enough to be interactive (under 1 second). I have about 3 million files in $HOME; one thing I can do is make my find command prune out some of the files. – benhsu Sep 29 '11 at 17:22

8 Answers

Searching for source files in a project

Use a simpler command

Generally, source for a project is likely to be in one place, perhaps in a few subdirectories nested no more than two or three deep, so you can use a (possibly) faster command such as

(cd /path/to/project; ls *.c */*.c */*/*.c)

Make use of project metadata

In a C project you'd typically have a Makefile. In other projects you may have something similar. These can be a fast way to extract a list of files (and their locations); write a script that makes use of this information to locate files. I have a "sources" script so that I can write commands like grep variable $(sources programname).
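The "sources" script itself is not shown in the answer. Purely as an illustration of the idea, here is a minimal sketch of a hypothetical helper with the same name. It assumes the Makefile lists its sources in a single-line variable called `SRCS`; that variable name and the argument order are assumptions, not something the answer specifies.

```shell
# Hypothetical helper, NOT the answer author's script.
# Prints the files named in a single-line Makefile variable (default: SRCS),
# one per line, prefixed with the directory that contains the Makefile.
# Assumes a plain assignment such as "SRCS = main.c util.c"
# (no line continuations, no make functions or variable references).
sources() {
    makefile=${1:-Makefile}
    var=${2:-SRCS}
    dir=$(dirname "$makefile")
    # Strip "VAR =", "VAR :=", "VAR +=" or "VAR ?=" and keep the value.
    sed -n "s/^${var}[[:space:]]*[:+?]*=[[:space:]]*//p" "$makefile" |
        tr -s ' \t' '\n\n' |
        sed -e '/^$/d' -e "s|^|${dir}/|"
}

# Usage (from the project root):
#   grep -n variable $(sources)
```

Real Makefiles often build their source lists with wildcards or included fragments, in which case asking the build tool itself for the list is likely to be more reliable than parsing the file.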

Speeding up find

Search fewer places: instead of find / … use find /path/to/project … where possible. Simplify the selection criteria as much as possible. Use pipelines to defer some selection criteria if that is more efficient.

Also, you can limit the depth of the search with the -maxdepth switch, for example '-maxdepth 5'. For me, this improves the speed of 'find' a lot.
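A minimal sketch of these points follows: the function restricts the search to one project root, caps the depth, and has `find` emit every regular file so that name filtering is deferred to `grep`. The function name `find_src`, the default depth of 5, and the extension list are illustrative assumptions. Whether the pipeline is actually faster than a `-name` test depends on the tree and should be measured.

```shell
# find_src ROOT [MAXDEPTH]
# List C sources and headers under ROOT, descending at most MAXDEPTH levels.
# -maxdepth is supported by GNU and BSD find but is not part of POSIX.
find_src() {
    root=${1:-.}
    depth=${2:-5}
    # find only selects regular files; the name filter runs in grep.
    find "$root" -maxdepth "$depth" -type f | grep -E '\.(c|h)$'
}

# Usage:
#   find_src /path/to/project 3
```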

Speeding up locate

Ensure it is indexing the locations you are interested in. Read the man page and make use of whatever options are appropriate to your task.

-U <dir>

Create slocate database starting at path <dir>.

-d <path>, --database=<path>

Specifies the path of databases to search in.

-l <level>

Security level. 0 turns security checks off. This will make searches faster. 1 turns security checks on. This is the default.

Remove the need for searching

Maybe you are searching because you have forgotten where something is, or because you were never told. In the former case, write notes (documentation); in the latter, ask. Conventions, standards and consistency can help a lot.

For a find replacement, check out fd. It has a simpler / more intuitive interface than the original find command, and is quite a bit faster.

A tactic that I use is to apply the -maxdepth option with find:

find -maxdepth 1 -iname "*target*"

Repeat with increasing depths until you find what you are looking for, or you get tired of looking. The first few iterations are likely to return instantaneously.

This ensures that you don't waste up-front time looking through the depths of massive sub-trees when what you are looking for is more likely to be near the base of the hierarchy.

Here's an example script to automate this process (Ctrl-C when you see what you want):

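(
TARGET="*target*"
# Search one depth level at a time, shallowest first.
for i in $(seq 1 9) ; do
    echo "=== search depth: $i"
    find -mindepth "$i" -maxdepth "$i" -iname "$TARGET"
done
# Everything deeper than 9 levels in one final pass.
echo "=== search depth: 10+"
find -mindepth 10 -iname "$TARGET"
)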

Note that the inherent redundancy involved (each pass will have to traverse the folders processed in previous passes) will largely be optimized away through disk caching.

Why doesn't find have this search order as a built-in feature? Maybe because it would be complicated/impossible to implement if you assumed that the redundant traversal was unacceptable. The existence of the -depth option hints at the possibility, but alas...

As of early 2021 I evaluated a few tools for similar use cases and integration with fzf:

tl;dr: plocate is a very fast alternative to mlocate in most cases. (GNU) find often still seems to be hard to beat for tools which don't require an index.

locate-likes (using indices)

I compared the commonly used mlocate and plocate. In a database of about 61 million files plocate answers specific queries (a couple of hundred results) in the order of 0.01 to 0.2 seconds and only becomes much slower (> 100 seconds) for very unspecific queries with millions of results. mlocate takes an almost constant 35 to 40 seconds to query the same database in all tested cases. Most of the time plocate is multiple orders of magnitude faster than mlocate.

find-likes (directory traversal)

It's harder to - sorry - locate a good find alternative, and the result very much depends on the specific queries. Here are some results for just trying to find all files in a sample directory (~200,000 files), in descending speed order:

- GNU find (`find -type f`): Surprisingly, `find` is often the fastest tool (~0.01 seconds)

- plocate (`plocate --regexp "^$PWD"`) (< 0.1 seconds)

- mlocate (`mlocate --regexp "^$PWD"`): Interestingly faster than the non-regexp queries (~5 seconds)

- ripgrep (`rg --files --no-ignore --hidden`): A good bit faster, but still very slow (~0.5 seconds)

- fd (`fdfind --type f` on Debian): Not as fast as expected (~0.7 seconds)

- zsh globs (`**/*` and extended versions): Generally very slow, but can be elegant in scripts (~3 seconds)

With directory structures not cached in RAM, plocate probably beats find, especially on disks with high seek times (HDDs), but does of course require an up-to-date database.

A parallelized find might give better results on systems which profit from command queuing and can request data in parallel. Depending on use case, this can be implemented in scripts, too, by running multiple instances of find on different subtrees, but performance characteristics depend on a lot of factors there.
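One way to sketch the "multiple instances of find on different subtrees" idea is with `xargs -P`. The function name `pfind`, the default of 4 jobs, and the split at the first directory level are assumptions made for illustration. `-P`, `-print0`/`-0` and `-mindepth`/`-maxdepth` are GNU/BSD extensions rather than POSIX, and whether this beats a single `find` depends on the storage and the cache state.

```shell
# pfind ROOT NAME-PATTERN [JOBS]
# Run one find per first-level subdirectory of ROOT, up to JOBS at a time.
# ROOT itself is never reported, and output order is not deterministic;
# with very large result sets, output from different jobs may interleave.
pfind() {
    root=$1
    pattern=$2
    njobs=${3:-4}
    # Non-directory entries directly inside ROOT are not covered by the
    # per-subtree runs below, so match them here.
    find "$root" -mindepth 1 -maxdepth 1 ! -type d -name "$pattern"
    # Hand each first-level directory to its own find process.
    find "$root" -mindepth 1 -maxdepth 1 -type d -print0 |
        xargs -0 -n 1 -P "$njobs" \
            sh -c 'pat=$1; shift; for d; do find "$d" -name "$pat"; done' sh "$pattern"
}

# Usage:
#   pfind /path/to/project '*.c' 8
```

Splitting at the first level only helps when the subtrees are of roughly similar size; one huge subdirectory will still be walked by a single process.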

grep-likes (looking at content)

There's a whole lot of recursive grep-like tools with different features, but most offer very limited features for finding files based on their metadata instead of their contents. Even if they are fast, like ag (The Silver Searcher), rg (ripgrep) or ugrep, they are not necessarily fast if just looking at file names.

-

@rogerdpack The tests have been mostly done with data on fast NVMe and in RAM. If file system structures are not in the cache, HDDs are much slower, but least so with locate (reading the whole database might just require a few seeks). – Thomas Luzat Sep 15 '21 at 16:23

-

Seems fair to add that the slower mlocate is more secure, only showing files that the user has access to. I don't need the security on my Linux desktop, so I'm switching. Thanks! – jbo5112 Dec 31 '21 at 15:20

-

When a directory has a nested structure, `find` will be very slow compared to `fd` or `rg` because they run in parallel and `find` does not. On 70k nested dirs (max-depth: 3; size: 6.5G) times in sec are 18.38 (`find`), 4.58 (`rg`), 4.55 (`fd`). – hrvoj3e Jan 12 '22 at 13:05

-

`plocate` might be faster but doesn't have `--transliterate` option (very [useful for non-English users](https://askubuntu.com/questions/908881/search-with-diacritics-accents-characters-with-locate-command)) – Pablo A Dec 25 '22 at 19:03

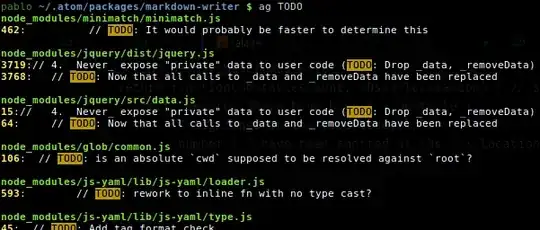

The Silver Searcher

You might find it useful for very fast searches through the content of a huge number of source code files. Just type ag <keyword>.

Here is some of the output of apt show silversearcher-ag on my system:

- Package: silversearcher-ag

- Maintainer: Hajime Mizuno

- Homepage: https://github.com/ggreer/the_silver_searcher

- Description: very fast grep-like program, alternative to ack-grep The Silver Searcher is grep-like program implemented by C. An attempt to make something better than ack-grep. It searches pattern about 3–5x faster than ack-grep. It ignores file patterns from your .gitignore and .hgignore.

I usually use it with:

-G --file-search-regex PATTERN

Only search files whose names match PATTERN.

ag -G "css$" important

-

[ripgrep's](https://github.com/BurntSushi/ripgrep) algorithm is allegedly faster than The Silver Searcher's, and it also honors `.gitignore` files and skips `.git`, `.svn`, `.hg` and similar folders. – ccpizza Mar 24 '18 at 21:07

-

@ccpizza So? [The Silver Searcher](https://github.com/ggreer/the_silver_searcher) also honors `.gitignore` and ignores hidden and binary files by default. It also has more contributors, more stars on GitHub (14700 vs 8300) and is already in the repos of major distros. Please provide an updated, reliable third-party comparison. Nonetheless, `ripgrep` looks like a great piece of software. – Pablo A Mar 24 '18 at 23:35

-

good to know! i'm not affiliated with author(s) of `ripgrep` in any way, it just fit my requirement so I stopped searching for other options. – ccpizza Mar 24 '18 at 23:38

-

The Silver Searcher respects `.gitignore` too. That said, `rg` is absolutely amazing. First off, it has Unicode support. In my experience `rg` is consistently at least twice as fast as `ag` (YMMV); I guess it's due to Rust's regex parser, which obviously wasn't ready yet back in the years when `ag` was new. `rg` can give deterministic output (but doesn't by default), it can blacklist file types where `ag` can only whitelist, and it can ignore files based on size (bye bye logs). I still use `ag` in case I need multiline matching, which `rg` can't do. – Pelle Apr 04 '19 at 00:43

Another easy solution is to use newer extended shell globbing. To enable:

- bash: shopt -s globstar

- ksh: set -o globstar

- zsh: already enabled

Then, you can run commands like this in the top-level source directory:

# grep through all c files

grep printf **/*.c

# grep through all files

grep printf ** 2>/dev/null

This has the advantage that it searches recursively through all subdirectories and is very fast.

If the file/directory you're looking for is likely to be "shallow" (i.e. not that many layers deep), you can speed up your search a lot by using a breadth-first search tool like bfs.

If you know the target is not in a given directory, you can also exclude directories as shown here.
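With plain `find`, one common way to exclude directories is `-prune`. The following is a minimal sketch under that assumption; the function name `find_skip` and the excluded names `.git` and `node_modules` are only examples and should be adapted to the tree being searched.

```shell
# find_skip ROOT NAME-PATTERN
# Search ROOT for NAME-PATTERN without descending into .git or node_modules.
find_skip() {
    root=${1:-.}
    pattern=${2:-*}
    # A directory with an excluded name is pruned (not entered, not printed);
    # everything else is tested against the name pattern.
    find "$root" \( -type d \( -name .git -o -name node_modules \) -prune \) \
        -o -name "$pattern" -print
}

# Usage:
#   find_skip /path/to/project '*.c'
```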
