I was copying some very large files to my computer: 28 files totalling roughly 200GB (meaning each one was about 7GB on average). I noticed that the transfer ran at more or less the same speed the entire time.
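As a quick check on the numbers, the average file size is the total divided by the file count. This is a minimal Python sketch that uses only the approximate figures from this post (28 files, roughly 200GB). The function name `average_file_size_gb` is hypothetical.

```python
# Rough average file size for the large-file copy described above.
# Figures come from the post: 28 files totalling roughly 200 GB.
TOTAL_GB = 200  # approximate total size reported in the post
FILE_COUNT = 28


def average_file_size_gb(total_gb: float, file_count: int) -> float:
    """Return the mean file size in GB for a given total size and file count."""
    if file_count <= 0:
        raise ValueError("file_count must be positive")
    return total_gb / file_count


avg = average_file_size_gb(TOTAL_GB, FILE_COUNT)
print(f"Average file size: {avg:.2f} GB")  # prints "Average file size: 7.14 GB"
```

Because the total is only approximate, the result should be read as "about 7GB per file", not an exact size.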

However, when I transfer many small files, the speed fluctuates much more:

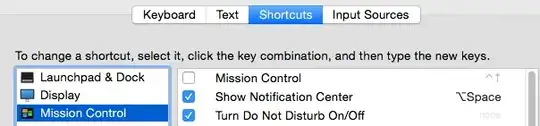

(Yes, this is from Google Drive, but the files are all downloaded locally to my computer.)