I have two folders containing various files. Some of the files from the first folder have an exact copy in the second folder. I would like to replace those with a hard link. How can I do that?

-

Please provide OS and filesystem. – Steven May 04 '15 at 20:24

-

Well, I use ext4 on Ubuntu 15.04, but if someone provides an answer for another OS, I am sure it can be helpful for someone reading this question. – qdii May 04 '15 at 20:34

-

Here is a [duplicate question on Unix.SE](https://unix.stackexchange.com/q/3037). – Alexey May 04 '18 at 08:46

5 Answers

I know of 4 command-line solutions for Linux. My preferred one is the last one listed here, rdfind, because of all the options available.

fdupes

- This appears to be the most recommended/most well-known one.

- It's the simplest to use, but its only action is to delete duplicates.

- To ensure duplicates are actually duplicates (while not taking forever to run), comparisons between files are done first by file size, then by md5 hash, then by byte-by-byte comparison.

Sample output (with options "show size", "recursive"):

$ fdupes -Sr .

17 bytes each:

./Dir1/Some File

./Dir2/SomeFile
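
The three-stage comparison described above (size, then md5, then byte-by-byte) can be sketched in Python roughly as follows. This is only an illustration of the idea, not fdupes' actual code; the function names `md5_of` and `find_duplicates` are made up for this example.

```python
import filecmp
import hashlib
import os
from collections import defaultdict


def md5_of(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Return lists of paths under root whose contents are identical."""
    # Stage 1: only files of equal size can be duplicates.
    by_size = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    groups = []
    for paths in by_size.values():
        if len(paths) < 2:
            continue
        # Stage 2: split each size group by MD5 digest.
        by_hash = defaultdict(list)
        for path in paths:
            by_hash[md5_of(path)].append(path)
        for candidates in by_hash.values():
            # Stage 3: confirm byte by byte, so a hash collision
            # cannot produce a false match.
            remaining = sorted(candidates)
            while len(remaining) > 1:
                first, rest = remaining[0], remaining[1:]
                same = [p for p in rest if filecmp.cmp(first, p, shallow=False)]
                if same:
                    groups.append([first] + same)
                remaining = [p for p in rest if p not in same]
    return groups
```

Calling `find_duplicates(".")` in the directory from the sample output should report `./Dir1/Some File` and `./Dir2/SomeFile` as one group. Each stage is more expensive than the previous one, so most files are ruled out before anything is read in full.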

hardlink

- Designed to, as the name indicates, replace found files with hardlinks.

- Has a `--dry-run` option.

- Does not indicate how contents are compared, but unlike all other options, does take into account file mode, owner, and modified time.

Sample output (note how my two files have slightly different modified times, so in the second run I tell it to ignore that):

$ stat Dir*/* | grep Modify

Modify: 2015-09-06 23:51:38.784637949 -0500

Modify: 2015-09-06 23:51:47.488638188 -0500

$ hardlink --dry-run -v .

Mode: dry-run

Files: 5

Linked: 0 files

Compared: 0 files

Saved: 0 bytes

Duration: 0.00 seconds

$ hardlink --dry-run -v -t .

[DryRun] Linking ./Dir2/SomeFile to ./Dir1/Some File (-17 bytes)

Mode: dry-run

Files: 5

Linked: 1 files

Compared: 1 files

Saved: 17 bytes

Duration: 0.00 seconds
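
For readers who would rather script the replacement step themselves, here is a minimal Python sketch of turning one confirmed duplicate into a hard link. It is not how the hardlink tool is implemented; `replace_with_hardlink` and the `.hardlink-tmp` suffix are hypothetical names chosen for this example, and unlike hardlink it ignores mode, owner, and modified time.

```python
import filecmp
import os


def replace_with_hardlink(original, duplicate):
    """Replace duplicate with a hard link to original.

    Returns True if a link was made, False if nothing was changed.
    """
    orig_stat = os.stat(original)
    dup_stat = os.stat(duplicate)
    # Already the same inode: nothing to do.
    if (orig_stat.st_dev, orig_stat.st_ino) == (dup_stat.st_dev, dup_stat.st_ino):
        return False
    # Hard links cannot cross filesystems.
    if orig_stat.st_dev != dup_stat.st_dev:
        return False
    # Only link files whose contents match byte for byte.
    if not filecmp.cmp(original, duplicate, shallow=False):
        return False
    # Create the link under a temporary name, then rename it over the
    # duplicate, so the duplicate's path never goes missing.
    temp_path = duplicate + ".hardlink-tmp"  # hypothetical temporary name
    os.link(original, temp_path)
    os.replace(temp_path, duplicate)
    return True
```

Linking to a temporary name first and renaming afterwards means a failure partway through leaves the duplicate untouched, rather than deleted with no link in its place.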

duff

- Made to find files that the user then acts upon; has no actions available.

- Comparisons are done by file size, then sha1 hash.

- Hash can be changed to sha256, sha384, or sha512.

- Hash can be disabled to do a byte-by-byte comparison

Sample output (with option "recursive"):

$ duff -r .

2 files in cluster 1 (17 bytes, digest 34e744e5268c613316756c679143890df3675cbb)

./Dir2/SomeFile

./Dir1/Some File

rdfind

- Options have an unusual syntax (meant to mimic `find`?).

- Several options for actions to take on duplicate files (delete, make symlinks, make hardlinks).

- Has a dry-run mode.

- Comparisons are done by file size, then first-bytes, then last-bytes, then either md5 (default) or sha1.

- Ranking of files found makes it predictable which file is considered the original.

Sample output:

$ rdfind -dryrun true -makehardlinks true .

(DRYRUN MODE) Now scanning ".", found 5 files.

(DRYRUN MODE) Now have 5 files in total.

(DRYRUN MODE) Removed 0 files due to nonunique device and inode.

(DRYRUN MODE) Now removing files with zero size from list...removed 0 files

(DRYRUN MODE) Total size is 13341 bytes or 13 kib

(DRYRUN MODE) Now sorting on size:removed 3 files due to unique sizes from list.2 files left.

(DRYRUN MODE) Now eliminating candidates based on first bytes:removed 0 files from list.2 files left.

(DRYRUN MODE) Now eliminating candidates based on last bytes:removed 0 files from list.2 files left.

(DRYRUN MODE) Now eliminating candidates based on md5 checksum:removed 0 files from list.2 files left.

(DRYRUN MODE) It seems like you have 2 files that are not unique

(DRYRUN MODE) Totally, 17 b can be reduced.

(DRYRUN MODE) Now making results file results.txt

(DRYRUN MODE) Now making hard links.

hardlink ./Dir1/Some File to ./Dir2/SomeFile

Making 1 links.

$ cat results.txt

# Automatically generated

# duptype id depth size device inode priority name

DUPTYPE_FIRST_OCCURRENCE 1 1 17 2055 24916405 1 ./Dir2/SomeFile

DUPTYPE_WITHIN_SAME_TREE -1 1 17 2055 24916406 1 ./Dir1/Some File

# end of file
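
The "first bytes" and "last bytes" elimination steps in the log above are cheap filters that run before any checksum. A rough Python sketch of that idea follows; the 64-byte window is an assumption for illustration (the answer does not say how many bytes rdfind reads), and `quick_signature` and `candidate_groups` are made-up names.

```python
import os
from collections import defaultdict


def quick_signature(path, nbytes=64):
    """Return (size, first bytes, last bytes) without reading the whole file."""
    size = os.path.getsize(path)
    with open(path, "rb") as handle:
        head = handle.read(nbytes)
        # Seek to the last nbytes of the file (or the start, if it is shorter).
        handle.seek(max(size - nbytes, 0))
        tail = handle.read(nbytes)
    return size, head, tail


def candidate_groups(paths, nbytes=64):
    """Group paths that still look identical after the cheap checks."""
    groups = defaultdict(list)
    for path in paths:
        groups[quick_signature(path, nbytes)].append(path)
    # Files with a unique signature cannot have a duplicate.
    return [group for group in groups.values() if len(group) > 1]
```

Files that differ only in the middle survive this filter, which is why a checksum (or a full comparison) still has to run on whatever is left.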


-

"then either md5 (default) or sha1." [That doesn't mean the files are identical.](http://th.informatik.uni-mannheim.de/people/lucks/HashCollisions/) Since computing a hash requires the program to read the entire file anyway, it should just compare the entire files byte-for-byte. Saves CPU time, too. – endolith Jan 21 '16 at 22:05

-

@endolith That's why you always start with dry-run, to see what would happen... – Izkata Jan 22 '16 at 15:02

-

But the point of the software is to identify duplicate files for you. If you have to manually double-check that the files are actually duplicates, then it's no good. – endolith Jan 22 '16 at 15:09

-

@endolith [You should do that anyway, with all of these](http://rmlint.readthedocs.org/en/latest/cautions.html) – Izkata Jan 22 '16 at 15:16

-

Yeah I use AllDup in Windows and it graphically shows all the matches, their properties, and lets you look through for potential issues before deleting/hardlinking. I can't find anything like it for Linux. – endolith Jan 22 '16 at 15:27

-

If you have _n_ files with identical size, first-bytes, _and_ end-bytes, but they're all otherwise different, determining that by direct comparison requires _n_(_n_−1)/2 pairwise comparisons. Hashing them all then comparing hashes is likely to be much faster, especially for large files and/or large numbers of files. Any that pass that filter can go on to do direct comparisons to verify. (Or just use a better hash to start.) – Alan De Smet Mar 08 '17 at 21:52

-

If you trust all of the people who might write the files involved (easiest if it's just you) and none of those people has reason to intentionally collect files with identical hashes, MD5 or SHA1 are probably more than good enough to identify identical files. The risk of accidental collision is vanishingly small. Not useful for random home directories, but fine for your own specialized collections of, say, audio, video, photos, particle accelerator models, or radiative transfer models. – Alan De Smet Mar 08 '17 at 21:58

-

It certainly is better to use hashes to search instead of byte-by-byte comparison, as @AlanDeSmet wrote. It is even better when you can save the hashes in a hash table and then only re-verify files modified after their insertion in that table. Is there a way to generate such a hash table using the CLI? Something like `"hash", "filename", "mtime"`. – Andrés Morales May 04 '18 at 17:46

-

@AndrésMorales As stated in the answer, that's how these work already. Only for ones that have the same hash do some of them double-check with a byte-by-byte comparison. – Izkata May 04 '18 at 19:30

-

@Izkata, I understand. But when a command is invoked many times (at different moments, of course), is the hash table preserved, or is it recreated each time? If the table is preserved, it could be automated (in a cron task or after system start). – Andrés Morales May 04 '18 at 19:38
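
The thread does not say whether any of these tools keeps its hashes between runs. Purely as an illustration of the `"hash", "filename", "mtime"` table asked about above, a persistent cache could look something like this Python sketch. The JSON file layout and the names `cached_digest`, `load_cache`, and `save_cache` are all assumptions made for this example.

```python
import hashlib
import json
import os


def cached_digest(path, cache):
    """Return the SHA-1 of path, rehashing only if size or mtime changed."""
    info = os.stat(path)
    entry = cache.get(path)
    if entry and entry["size"] == info.st_size and entry["mtime"] == info.st_mtime_ns:
        return entry["hash"]
    digest = hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    cache[path] = {
        "hash": digest.hexdigest(),
        "size": info.st_size,
        "mtime": info.st_mtime_ns,
    }
    return cache[path]["hash"]


def load_cache(cache_file):
    """Load the table from disk, or start empty if it does not exist yet."""
    if not os.path.exists(cache_file):
        return {}
    with open(cache_file, "r", encoding="utf-8") as handle:
        return json.load(handle)


def save_cache(cache, cache_file):
    """Write the table back to disk so the next run can reuse it."""
    with open(cache_file, "w", encoding="utf-8") as handle:
        json.dump(cache, handle)
```

A cache like this trusts size and mtime: a file rewritten with the same size and an unchanged timestamp would keep its old hash, so a final byte-by-byte check before linking is still worthwhile.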

-

Do you know why running `rdfind -deleteduplicates true -makehardlinks true` several times keeps creating links? Why does it not create all the links in the first pass? – Luis A. Florit Jul 17 '19 at 16:32

-

[jdupes](https://github.com/jbruchon/jdupes) was not available at the original time of this answer, but it is a much better choice than fdupes from which it was forked in 2015. Aside from much better performance, features like exclusion by date, hard linking, and BTRFS/XFS/APFS copy-on-write deduplication make life a lot easier when sifting through duplicates. jdupes does perform byte-for-byte comparison to ensure hash collisions can't cause data loss. – Jody Bruchon Jul 10 '20 at 20:04

I highly recommend jdupes. It is an enhanced fork of fdupes, but also includes:

- a bunch of new command-line options, including `--linkhard`, or `-L` for short

- native support for all major OS platforms

- speed said to be over 7 times faster than fdupes on average

For your question, you can just execute `jdupes -L /path/to/your/files`.

You may want to clone and build the latest source from its GitHub repo since the project is still under active development.

Windows binaries are also provided here. Packaged binaries are available in some Linux / BSD distros; I actually first found it through `apt search`.


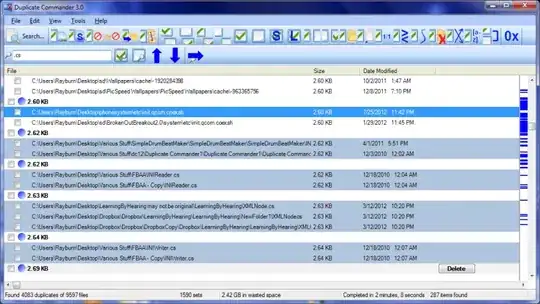

Duplicate Commander is a possible solution on Windows:

Duplicate Commander is a freeware application that allows you to find and manage duplicate files on your PC. Duplicate Commander comes with many features and tools that allow you to recover your disk space from those duplicates.

Features:

- Replacing files with hard links

- Replacing files with soft links

- ... (and many more) ...

For Linux you can find a Bash script here.

-

Link no longer working for DC. Do you have an update perchance? – RockPaperLz- Mask it or Casket Oct 24 '20 at 03:29

Duplicate & Same Files Searcher is yet another solution on Windows:

Duplicate & Same Files Searcher (Duplicate Searcher) is an application for searching duplicate files (clones) and NTFS hard links to the same file. It searches duplicate file contents regardless of file name (true byte-to-byte comparison is used). This application allows not only to delete duplicate files or to move them to another location, but to replace duplicates with NTFS hard links as well (unique!)


I had a nifty free tool on my computer called Link Shell Extension; not only was it great for creating Hard Links and Symbolic Links, but Junctions too! In addition, it added custom icons that allow you to easily identify different types of links, even ones that already existed prior to installation; Red Arrows represent Hard Links for instance, while Green represent Symbolic Links... and chains represent Junctions.

Unfortunately, I uninstalled the software a while back (in a mass-uninstallation of various programs), so I can't create any more links manually, but the icons still show up automatically whenever Windows detects a Hard Link, Symbolic Link, or Junction.


-

LSE can be downloaded from here: https://schinagl.priv.at/nt/hardlinkshellext/linkshellextension.html – azrdev Aug 19 '22 at 20:08